
Official documentation

Versions

- Flink 1.15.3

- CDC 2.3.0

- Oracle 11g and 12c (the official site says 19c is supported; not tested)

JARs

https://repo1.maven.org/maven2/com/ververica/flink-sql-connector-oracle-cdc/2.3.0/flink-sql-connector-oracle-cdc-2.3.0.jar

- 2.1-3.0: https://repo1.maven.org/maven2/com/ververica/flink-sql-connector-oracle-cdc/

- 3.1+: https://repo1.maven.org/maven2/org/apache/flink/flink-sql-connector-oracle-cdc/

Oracle installation

Install Oracle 11g with Docker

Install Oracle 12c with Docker

Enable archive log mode

-- 1. Shut down the database

Create the tablespace and user, and grant privileges

Just follow the official documentation here:

CREATE TABLESPACE logminer_tbs DATAFILE '/u01/app/oracle/oradata/xe/logminer_tbs.dbf' SIZE 25M REUSE AUTOEXTEND ON MAXSIZE UNLIMITED;

CREATE USER flinkuser IDENTIFIED BY flinkpw DEFAULT TABLESPACE LOGMINER_TBS QUOTA UNLIMITED ON LOGMINER_TBS;

GRANT CREATE SESSION TO flinkuser;

GRANT SET CONTAINER TO flinkuser;

GRANT SELECT ON V_$DATABASE to flinkuser;

GRANT FLASHBACK ANY TABLE TO flinkuser;

GRANT SELECT ANY TABLE TO flinkuser;

GRANT SELECT_CATALOG_ROLE TO flinkuser;

GRANT EXECUTE_CATALOG_ROLE TO flinkuser;

GRANT SELECT ANY TRANSACTION TO flinkuser;

GRANT LOGMINING TO flinkuser;

GRANT ANALYZE ANY TO flinkuser;

GRANT CREATE TABLE TO flinkuser;

-- not required if scan.incremental.snapshot.enabled=true (the default)

GRANT LOCK ANY TABLE TO flinkuser;

GRANT ALTER ANY TABLE TO flinkuser;

GRANT CREATE SEQUENCE TO flinkuser;

GRANT EXECUTE ON DBMS_LOGMNR TO flinkuser;

GRANT EXECUTE ON DBMS_LOGMNR_D TO flinkuser;

GRANT SELECT ON V_$LOG TO flinkuser;

GRANT SELECT ON V_$LOG_HISTORY TO flinkuser;

GRANT SELECT ON V_$LOGMNR_LOGS TO flinkuser;

GRANT SELECT ON V_$LOGMNR_CONTENTS TO flinkuser;

GRANT SELECT ON V_$LOGMNR_PARAMETERS TO flinkuser;

GRANT SELECT ON V_$LOGFILE TO flinkuser;

GRANT SELECT ON V_$ARCHIVED_LOG TO flinkuser;

GRANT SELECT ON V_$ARCHIVE_DEST_STATUS TO flinkuser;

Create the test tables

Source

CREATE TABLE FLINKUSER.CDC_SOURCE (

INSERT INTO FLINKUSER.CDC_SOURCE (ID, NAME) VALUES (1, '1');

Sink

CREATE TABLE FLINKUSER.CDC_SINK (

CDC Oracle2Oracle

set yarn.application.name=cdc_oracle2oracle;

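Notes:

- In the Oracle CDC SQL, column names, database-name, schema-name and table-name must all be uppercase; otherwise the job runs into problems.

- Both the source and the sink table need a primary key; otherwise the row counts do not match and can differ widely. There appears to be an option that supports tables without a primary key, but I have not got it working yet.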
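The uppercase rule in the first note is easy to miss, so it can help to check a connector definition before submitting the job. The following is only a sketch: the helper `find_lowercase_identifiers` and the plain dictionary of options are hypothetical and are not part of Flink CDC. It simply reports which of the three name options and which column names are not fully uppercase.

```python
# Hypothetical pre-flight check; neither the helper nor the option dict
# comes from Flink CDC. It only compares each value with its uppercase form.
CASE_SENSITIVE_OPTIONS = ("database-name", "schema-name", "table-name")


def find_lowercase_identifiers(options, columns):
    """Return option keys and column names whose values are not fully uppercase."""
    offenders = []
    for key in CASE_SENSITIVE_OPTIONS:
        value = options.get(key, "")
        if value != value.upper():
            offenders.append(key)
    for column in columns:
        if column != column.upper():
            offenders.append(column)
    return offenders


options = {
    "database-name": "xe",  # lowercase on purpose, to show a finding
    "schema-name": "FLINKUSER",
    "table-name": "CDC_SOURCE",
}
print(find_lowercase_identifiers(options, ["ID", "name"]))
```

An empty list means every checked name is already uppercase.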

Exceptions

Exception 1

org.apache.flink.util.FlinkException: Global failure triggered by OperatorCoordinator for 'Source: oracle_cdc_source[1] -> DropUpdateBefore[2] -> ConstraintEnforcer[3] -> Sink: oracle_cdc_sink[3]' (operator cbc357ccb763df2852fee8c4fc7d55f2).

- Cause: JAR conflict with calcite-core-1.10.0.jar.

- Fix: remove that JAR from Flink's lib directory.

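Exception 2

Caused by: java.sql.SQLException: Invalid column type

Cause: JAR conflict with ojdbc7.jar. This variant appears when the source table contains only one row.

The JAR is not in Flink's lib directory, but the job log shows it being loaded, so check whether it exists under the JDK:

find /usr/lib/jvm -name "ojdbc*"

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.181-7.b13.el7.x86_64/jre/lib/ext/ojdbc7.jar

Moving the JAR out of that directory fixes the problem: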

mv /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.181-7.b13.el7.x86_64/jre/lib/ext/ojdbc7.jar ~/
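The same search can be scripted when several directories have to be checked at once. This is a minimal sketch: `find_conflicting_jars` is a hypothetical helper, and `/opt/flink/lib` is an assumed install location that does not appear in this post. It only lists matches and does not move or delete anything.

```python
from pathlib import Path


def find_conflicting_jars(roots, pattern="ojdbc*.jar"):
    """Recursively list files matching `pattern` under each root that exists."""
    matches = []
    for root in roots:
        root_path = Path(root)
        if root_path.is_dir():  # silently skip directories that are absent
            matches.extend(sorted(root_path.rglob(pattern)))
    return matches


if __name__ == "__main__":
    # /usr/lib/jvm is the directory searched above;
    # /opt/flink/lib is an assumption for illustration only.
    for jar in find_conflicting_jars(["/usr/lib/jvm", "/opt/flink/lib"]):
        print(jar)
```

Any path it prints outside Flink's own lib directory is a candidate for the conflict described here.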

Exception 3

2025-06-23 09:26:52

Same cause as Exception 2 (JAR conflict with ojdbc7.jar), but this variant appears when the source table has two or more rows.

Exception 4

Caused by: io.debezium.DebeziumException: Supplemental logging not properly configured. Use: ALTER DATABASE ADD SUPPLEMENTAL LOG DATA

Minimal supplemental logging is not enabled. Enable it:

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

The following SQL checks whether it is enabled:

SELECT

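Exception 5

Caused by: io.debezium.DebeziumException: Supplemental logging not configured for table XE.FLINKUSER.CDC_SOURCE. Use command: ALTER TABLE FLINKUSER.CDC_SOURCE ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS

All-column supplemental logging is not enabled. The statement below enables it for the whole database; the error message itself suggests the narrower per-table form, ALTER TABLE FLINKUSER.CDC_SOURCE ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS.

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;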

Exception 6

Caused by: org.apache.flink.util.FlinkRuntimeException: Failed to discover captured tables for enumerator

Cause: database-name was set to the SID rather than the Service Name. Change it to the Service Name, or use the url option (the earliest official documentation did not have a url option).

Root cause: when the Oracle CDC source builds the URL from the configured options, the format is hard-coded as jdbc:oracle:thin:@//<host>:<port>/<service_name>, and the SID and Service Name formats differ:

- URL format with a SID: jdbc:oracle:thin:@<host>:<port>:<SID>

- URL format with a Service Name: jdbc:oracle:thin:@//<host>:<port>/<service_name>

To address this, 2.3.0 added support for a custom url: Support custom url for incremental snapshot source https://github.com/ververica/flink-cdc-connectors/commit/4d9c0e41e169bf6cd8196a318c65fc965e002f57
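To make the difference between the two URL forms concrete, here is a small sketch that builds either one. The function `oracle_jdbc_url` is hypothetical and is not taken from the connector source; only the two format strings come from the text above. The host, port and `XE` values are placeholders.

```python
def oracle_jdbc_url(host, port, sid=None, service_name=None):
    """Build a thin-driver JDBC URL from either a SID or a Service Name."""
    if (sid is None) == (service_name is None):
        raise ValueError("pass exactly one of sid or service_name")
    if sid is not None:
        # SID form: colon-separated, no leading slashes
        return f"jdbc:oracle:thin:@{host}:{port}:{sid}"
    # Service Name form: the format the connector hard-codes
    return f"jdbc:oracle:thin:@//{host}:{port}/{service_name}"


print(oracle_jdbc_url("localhost", 1521, sid="XE"))
print(oracle_jdbc_url("localhost", 1521, service_name="XE"))
```

When only a SID is available, the first form is the kind of value to pass through the url option instead of relying on database-name.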

Exception 7

Caused by: com.ververica.cdc.connectors.shaded.org.apache.kafka.connect.errors.DataException: file is not a valid field name

This exception appears while reading incremental data. The cause is a lowercase database-name; change it to uppercase.

-- 'database-name' = 'xe',

异常8

1 | Caused by: io.debezium.DebeziumException: The db history topic or its content is fully or partially missing. Please check database history topic configuration and re-execute the snapshot. |

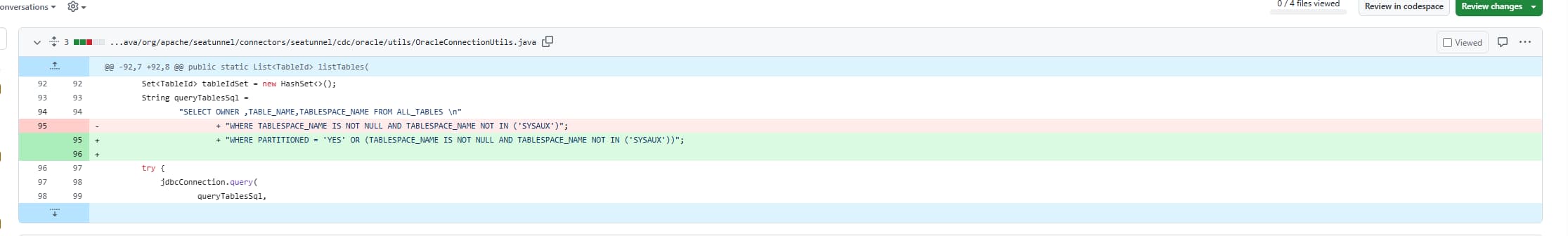

该异常为分区表 bug (不支持分区表),只有分区表才会有这个这个异常,相关链接:

https://github.com/apache/flink-cdc/issues/1737

https://developer.aliyun.com/ask/531328

https://github.com/apache/flink-cdc/pull/2479

https://github.com/apache/seatunnel/pull/8265

解决方法,参考上面两个PR修改源码 OracleConnectionUtils, 重新打包:

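Session count keeps growing and is never released

After a Flink job fails (with certain exceptions), its JDBC connections are not released. The job keeps trying to connect and keeps failing, so the number of sessions climbs sharply, exceeds the available total, and eventually makes Oracle unavailable.

The cause is the behaviour described in "Flink restart strategies and failure recovery strategies": with the default settings, a failed streaming job retries indefinitely. Limiting the number of retries solves it:

restart-strategy: fixed-delay

restart-strategy.fixed-delay.attempts: 3

restart-strategy.fixed-delay.delay: 10 s

I have not yet reproduced which specific exception triggers this and will update the post once I do. Because edits to WeChat official-account articles are limited in length, updates appear in the original post linked at the bottom of the article.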
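The effect of capping the retries can be shown with a toy model. This is a simplified sketch, not Flink's scheduler: it assumes one initial run plus at most `max_restarts` restarts, ignores the delay between attempts, and uses a hypothetical `connect` callable that stands in for opening the Oracle connection.

```python
def count_connection_attempts(max_restarts, connect):
    """Call `connect` once, then up to `max_restarts` more times while it fails."""
    attempts = 0
    for _ in range(max_restarts + 1):  # initial run + bounded restarts
        attempts += 1
        try:
            connect()
            break  # connected, no further restarts needed
        except ConnectionError:
            continue  # failed, use up one restart
    return attempts


def always_failing_connect():
    # Stand-in for a connection that can never be established.
    raise ConnectionError("simulated Oracle connection failure")


print(count_connection_attempts(3, always_failing_connect))
```

With a bound in place, the number of connection attempts that can leak a session is fixed, whereas an unbounded retry loop keeps adding to it for as long as the failure persists.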

Exception 9

Caused by: Error : 1031, Position : 25, Sql = SELECT * FROM "FLINKUSER"."CDC_SOURCE" AS OF SCN 20645794535650, OriginalSql = SELECT * FROM "FLINKUSER"."CDC_SOURCE" AS OF SCN 20645794535650, Error Msg = ORA-01031: insufficient privileges

The user lacks privileges. Grant them with SQL:

GRANT FLASHBACK ANY TABLE TO flinkuser;

GRANT SELECT ANY TRANSACTION TO flinkuser;

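Incremental data not syncing

On one project, incremental data stopped syncing without any error. It recovered on its own before I found the cause, and I have not been able to reproduce it since.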

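Parameter tuning

For large tables, the parameters need tuning:

'debezium.log.mining.batch.size.min' = '200000',

'debezium.log.mining.batch.size.max' = '50000000',

'scan.incremental.snapshot.enabled' = 'false', -- default is true; without this change reads are very slow, a few dozen rows per second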

SET execution.checkpointing.timeout = 600000s;